Posts: 877

Profile Information

Gender: Not Telling

Recent Profile Visitors

4,927 profile views

h.ozboluk reacted to a post in a topic: Blend tool in Designer

Blend tool in Designer

rui_mac replied to Athanasius Pernath's topic in Feedback for the Affinity V2 Suite of Products

I requested a Blend Tool back when Affinity was still in beta. That was MANY, MANY YEARS AGO!

219 replies

Tagged with: blend tool, blend (and 1 more)

rui_mac reacted to a post in a topic: Why does Dodge and Burn tools don't work on masks?

NotMyFault reacted to a post in a topic: Why does Dodge and Burn tools don't work on masks?

rui_mac reacted to a post in a topic: Why does Dodge and Burn tools don't work on masks?

R C-R reacted to a post in a topic: Why does Dodge and Burn tools don't work on masks?

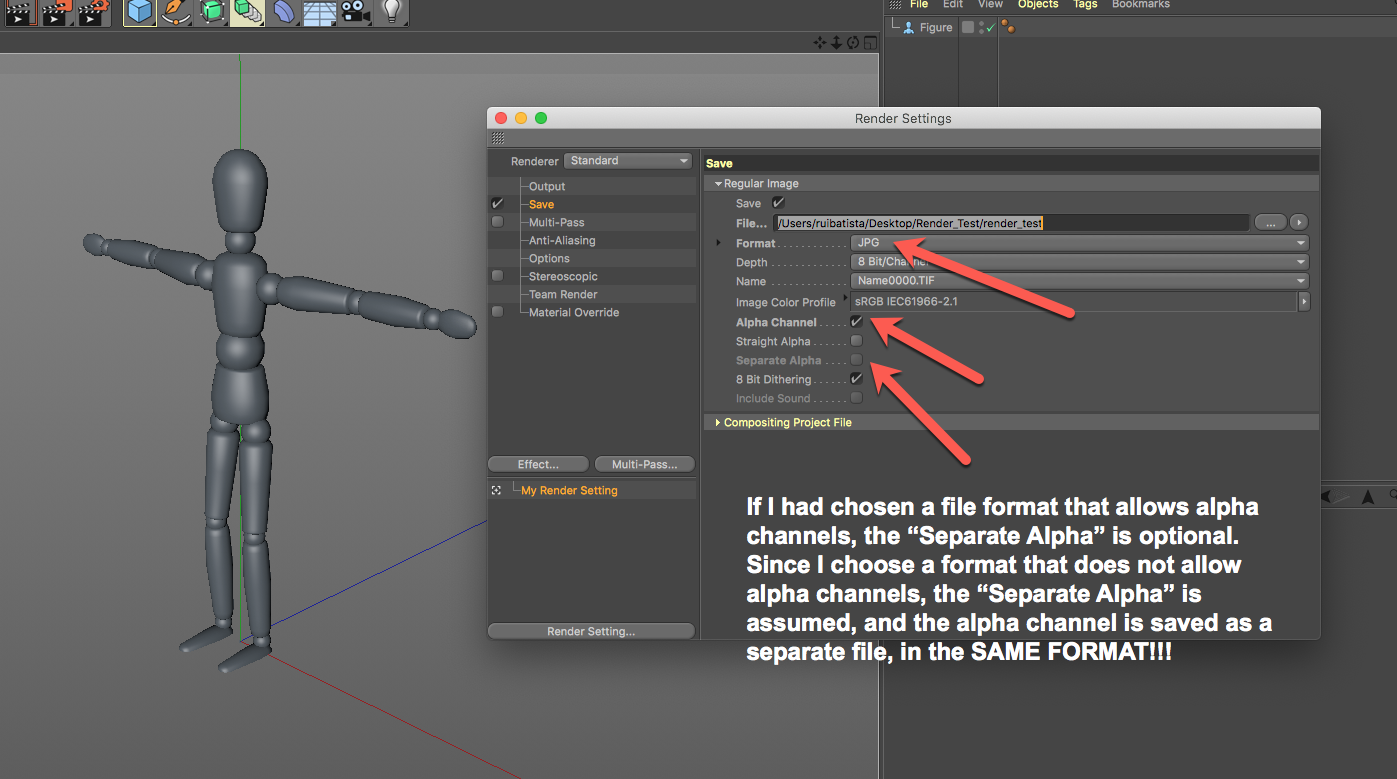

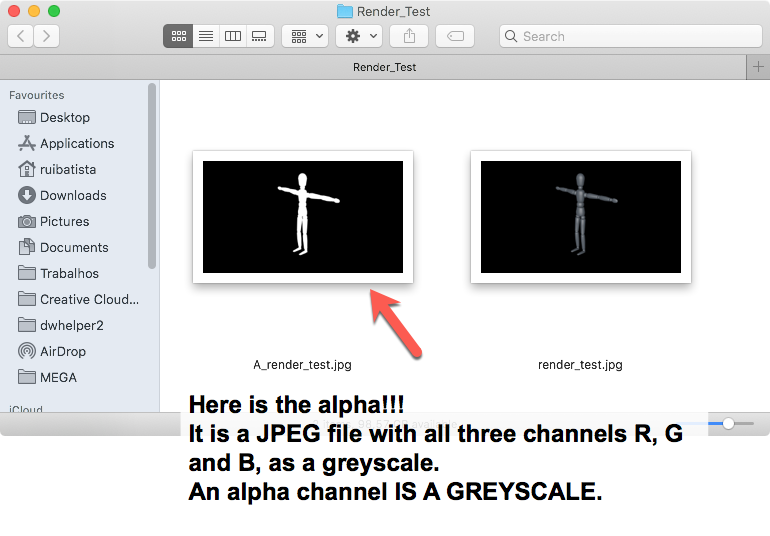

If you create a 3D rendering, ask the 3D application to save the RGB with an alpha channel, and choose a file format that does not allow alpha channels (JPEG, for example), you have to save the alpha channel as an additional file (one JPEG for the RGB and one JPEG for the alpha). What type of image will the alpha JPEG be? A colourless image? A JPEG without any coloured pixels? Please, tell me what will be in that JPEG.
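To make the point concrete, here is a minimal Python sketch. It assumes the rendered pixels are held as a plain list of 8-bit (R, G, B, A) tuples, which is a simplification: real renderers and file writers use their own buffers. Splitting them the way a renderer has to for JPEG output leaves exactly one value per pixel for the alpha, and writing that value into R, G and B gives a greyscale image. The names `split_rgba` and `alpha_as_grey_rgb` are hypothetical, chosen only for this illustration.

```python
from typing import List, Tuple

# Hypothetical helpers for illustration only; pixels are assumed to be
# 8-bit (R, G, B, A) tuples in a flat list.
RGBA = Tuple[int, int, int, int]
RGB = Tuple[int, int, int]


def split_rgba(pixels: List[RGBA]) -> Tuple[List[RGB], List[int]]:
    """Split RGBA pixels into RGB pixels and one 0-255 alpha value per pixel."""
    rgb = [(r, g, b) for r, g, b, _ in pixels]
    alpha = [a for _, _, _, a in pixels]
    return rgb, alpha


def alpha_as_grey_rgb(alpha: List[int]) -> List[RGB]:
    """Show the alpha as a greyscale image: each value copied into R, G and B."""
    return [(a, a, a) for a in alpha]


if __name__ == "__main__":
    # An opaque red pixel and a half-transparent blue pixel.
    pixels = [(255, 0, 0, 255), (0, 0, 255, 128)]
    rgb, alpha = split_rgba(pixels)
    print(rgb)                       # [(255, 0, 0), (0, 0, 255)]
    print(alpha)                     # [255, 128]
    print(alpha_as_grey_rgb(alpha))  # [(255, 255, 255), (128, 128, 128)]
```

Nothing about the alpha values is special once they are separated: they are a single channel of numbers, which is why the second JPEG ends up as an ordinary greyscale picture.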

rui_mac reacted to a post in a topic: Why does Dodge and Burn tools don't work on masks?

We don't care about it. A mask/alpha/key, whatever you decide to call it, is a LIST OF NUMERICAL VALUES, just like a greyscale image is, and just like any of the individual Red, Green, Blue, Cyan, Magenta, Yellow, Black, Duotone, Spot Color, etc., channels are. If they are all lists of numerical values and I can manipulate them with paint/editing tools, I should also be able to do the same with the mask/alpha/key channel. If I can show any color channel as greyscale, and ALSO A MASK/ALPHA/KEY channel as greyscale, why should it be possible to dodge/burn/blur/sharpen/smear, etc., any of the color channels but not a mask/alpha/key channel? Photoshop (and most other applications that edit bitmaps and mask/alpha/key channels) does not make that distinction. Internally, a mask/alpha/key channel is exactly the same as a Red, Green, Blue, Cyan, Magenta, Yellow, Black, Duotone or Spot Color channel. It is incredible that after so many years you still argue that they are different.
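A minimal Python sketch of the argument, assuming a channel is stored as a flat list of 8-bit values (0 to 255). The `dodge` and `burn` functions are hypothetical and deliberately simplified (a linear move toward white or toward black); they are not the formulas Photoshop or Affinity actually use, which also treat shadows, midtones and highlights differently. The point is only that the same code runs unchanged on a colour channel and on a mask, because both are just lists of numbers.

```python
from typing import List

# Hypothetical, simplified tools: NOT the actual Photoshop/Affinity formulas.
# A "channel" is assumed to be a flat list of 8-bit values (0-255), whether it
# is a Red/Green/Blue/CMYK/spot channel or a mask/alpha/key channel.


def _check(channel: List[int], amount: float) -> None:
    """Reject amounts outside 0..1 and values that are not 8-bit."""
    if not 0.0 <= amount <= 1.0:
        raise ValueError("amount must be between 0 and 1")
    if any(not 0 <= v <= 255 for v in channel):
        raise ValueError("channel values must be 8-bit (0-255)")


def dodge(channel: List[int], amount: float) -> List[int]:
    """Lighten: move every value a fraction `amount` of the way toward 255."""
    _check(channel, amount)
    return [round(v + (255 - v) * amount) for v in channel]


def burn(channel: List[int], amount: float) -> List[int]:
    """Darken: move every value a fraction `amount` of the way toward 0."""
    _check(channel, amount)
    return [round(v * (1 - amount)) for v in channel]


if __name__ == "__main__":
    red_channel = [0, 100, 200]  # a few values from a colour channel
    mask = [0, 100, 200]         # a few values from a mask
    # The tool neither knows nor cares which kind of channel it is editing.
    print(dodge(red_channel, 0.25))  # [64, 139, 214]
    print(dodge(mask, 0.25))         # [64, 139, 214]
    print(burn(mask, 0.25))          # [0, 75, 150]
```

Because neither function inspects what the channel "means", restricting them to colour channels would be a choice made in the application's UI, not something the data itself requires.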

influxx reacted to a post in a topic: Why does Dodge and Burn tools don't work on masks?

rui_mac reacted to a post in a topic: Blend tool in Designer

Blend tool in Designer

rui_mac replied to Athanasius Pernath's topic in Feedback for the Affinity V2 Suite of Products

I didn't know VectorStyler. It looks really nice. I wish Serif would add something like this to Affinity.

Blend tool in Designer

rui_mac replied to Athanasius Pernath's topic in Feedback for the Affinity V2 Suite of Products

Here is a video I recorded a few years ago, when Affinity Designer was still in its infancy, showing how great the Blend tool was in FreeHand. I also recorded a few more videos about FreeHand, since it was such an amazing application, and I wish some of its features were implemented in Affinity, as the way they worked in FreeHand is still better than what Illustrator has now.

rui_mac reacted to a post in a topic: Blend tool in Designer

App quits after installing models

rui_mac replied to rui_mac's topic in Other New Bugs and Issues in the Betas

I click the Install button. A progress bar appears and, when it reaches the end, Affinity Photo crashes. And yes, I was clicking the buttons one at a time.

App quits after installing models

rui_mac replied to rui_mac's topic in Other New Bugs and Issues in the Betas

My Mac does have a GPU: not a very recent one, but an NVIDIA GeForce GT 640M with 512 MB. As for choosing CPU Only, I tried it, but it still crashes after loading any of the models. I don't know if it helps, but I have attached the crash report: Crash_report.rtf

When I try to install any of the models, Affinity Photo quits on its own once the download has finished. I have tried several times and it happens every single time. I'm on an iMac with a 2.7 GHz Quad-Core Intel Core i5, running macOS Catalina 10.15.7.
