dkallan

Members-

Posts

13 -

Everything posted by dkallan

-

Just saw this thread after replying in another topic. This is even more relevant than where I replied. While familiar with Affinity Photo for DSLR photos, I'm now just dipping my toes in the water for 360° photography. The output of my 360° camera's stitching software is a "raw" DNG that preserves the full dynamic range of the two sensors' data (and for HDR images, I have the option to export multiple DNGs for an HDR Merge). Naturally this opens in the Develop persona. The Develop persona (just asked for a 360° "preview" option there) works perfectly with some adjustments: Exposure, Enhance, White Balance—I think even Curves does its job—without creating a seam at the edges. But if I play with Shadows/Highlights or Tone, I can end up with a gnarly seam. Same with HDR Merge. If I wait on adjusting tone or shadows/highlights until after development, I lose all that beautiful latitude from the raw image. I don't know of a way to duplicate/wrap around the edges of a raw DNG file and still have it be a DNG. Layer live projection is only available in the Photo persona. Has anyone found a better solution?
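To illustrate why some adjustments leave a seam while others do not, here is a minimal sketch in plain Python. It assumes (this is a guess, not documented Affinity behaviour) that Shadows/Highlights and Tone look at a neighbourhood around each pixel, whereas Exposure or Curves touch each pixel independently. The hypothetical `box_blur_row` stands in for any such neighbourhood filter. When the filter treats the row as flat, columns near the left and right edges see different neighbours than they would on the sphere; when it wraps around, the result no longer depends on where the file happens to be cut.

```python
def box_blur_row(row, radius, wrap):
    """Average each pixel with its horizontal neighbours.

    wrap=True  : the row is circular (last column sits next to column 0),
                 as in a 360-degree equirectangular image.
    wrap=False : neighbours are clamped at the edges, as a filter that
                 assumes an ordinary flat photo would do.
    """
    n = len(row)
    out = []
    for x in range(n):
        total = 0.0
        for dx in range(-radius, radius + 1):
            i = x + dx
            i = i % n if wrap else min(max(i, 0), n - 1)
            total += row[i]
        out.append(total / (2 * radius + 1))
    return out


def rotate(row, k):
    """Shift the row left by k columns, i.e. move the cut line."""
    k %= len(row)
    return row[k:] + row[:k]


# One row of illustrative brightness values (made up for this sketch).
row = [0.2, 0.5, 0.9, 0.4, 0.1, 0.3]
k = 2

# Filter directly, or move the cut line, filter, then move it back.
wrapped_direct = box_blur_row(row, 1, wrap=True)
wrapped_moved = rotate(box_blur_row(rotate(row, k), 1, wrap=True), len(row) - k)

clamped_direct = box_blur_row(row, 1, wrap=False)
clamped_moved = rotate(box_blur_row(rotate(row, k), 1, wrap=False), len(row) - k)

print("wrap-aware result independent of cut line:", wrapped_direct == wrapped_moved)
print("clamped result independent of cut line:   ", clamped_direct == clamped_moved)
```

With wrap-around the two results match exactly; with clamping they differ near the cut, which is where a visible seam would appear.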

-

For those of us starting to do more 360° photography, it would be a tremendous help to allow for a live projection "preview" of a raw equirectangular image in the Develop persona, similar to the existing layer Live Projection capability in the Photo persona. Today, the Develop persona shows a raw 360° equirectangular image not as it is intended to be viewed, but only as it exists on disk: a flat rectangle with its inherent polar distortion and two finite sides that are intended to tile together seamlessly. When developing such an image, we often need to make color and tone decisions based on portions of the image that are inconveniently located near the distorted top and bottom or split across opposite sides of the rectangle. The ability to toggle a "preview" of the raw image as a live 360° projection, seamless and undistorted, would enable easier and better decision-making during development. Affinity Photo already supports a live 360° view of an equirectangular projection layer within the Photo persona, so it should not be a tremendous effort to offer a similar "preview" during development. For more details, this is discussed in the topic Live Equirectangular Projection in Develop Persona.

-

Thanks, @kirkt. I will use the Feature Requests forum. Appreciate your advice on caution with adjustments. The raw 360° photos I have developed in Affinity Photo look surprisingly good for what seems like a toy camera (no issues with mismatches along the "seamless" edges). But that 360° preview capability in Develop would make life easier from a decision-making perspective. Because the live projection is layer-based, there are some special considerations with adjustment layers, but that's later in the workflow anyway. Yes, the stitching software is very basic for raw photos: take in an unstitched DNG, check the stitching and horizon, spit out a stitched DNG or stitched DNG+JPEG (no color adjustment, no offset/shifting/recentering of image content). After it spits out the stitched DNG, that software has no more role in the workflow—it's all in the hands of other photo software to grade/develop the DNG and do other adjustments. Although there's no "feedback loop" for this particular stitching software, your Smart Objects idea would be useful in so many other applications. Keep up the crusade!

-

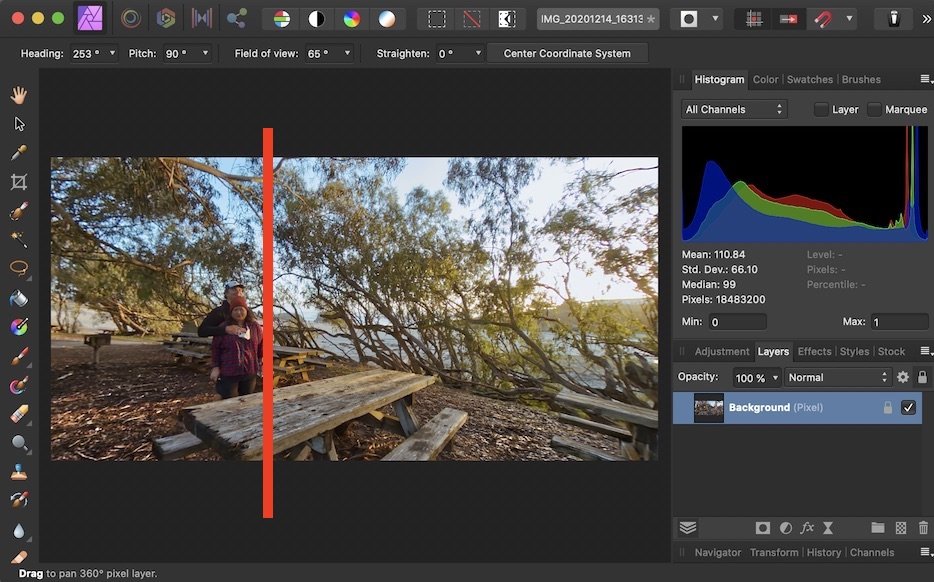

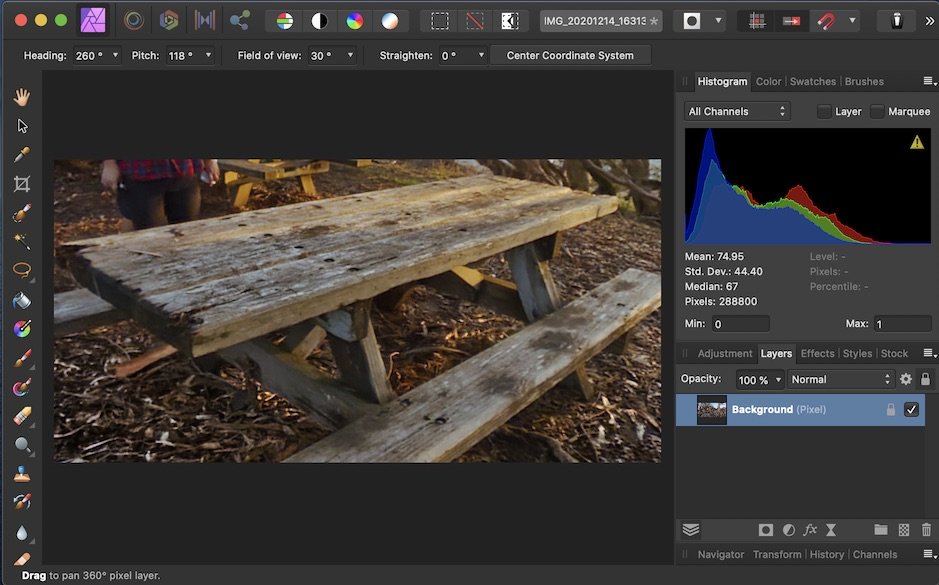

Rather than get caught up in terminology, which I may get wrong, I’ll show you what I mean.

Recent Insta360 cameras, while not exactly the paragon of high quality like my DSLRs, do produce raw photos. As with all cameras, sensor data passes through some sort of signal processing path before becoming a “raw” file. In this weird new Insta360 world, raw file processing occurs in two stages: pre-stitched and stitched. Maybe the Insta360 stitched DNG should be called a “pseudo-raw” format, but as far as image editing apps go, they see it as a raw image complete with lens info and metadata.

Here’s what happens: When the camera takes a photo while the capture mode is set to “JPG+RAW”, it creates two files on the camera card: a DNG file containing the concatenated information from both sensors, and a proprietary “INSP” file containing the compressed JPEG+metadata with all sorts of automatic color, tone and detail processing performed in-camera and in the off-board camera app/studio. (In JPEG-only mode, only the compressed INSP file gets created, and the raw data is discarded.) The Insta360 smartphone app can easily extract and process the JPEG from the INSP file, but it does not deal with DNG files. DNG files can only be handled by photo apps and the Insta360 Studio desktop app, and the desktop app is needed for stitching.

Taken immediately off the camera card, the DNG file is unstitched, flat in color, and looks something like this: [SEE PHOTO 1]

The DNG must be loaded into Insta360’s computer-based stitching app, where stitching is first attempted automatically and then subject to manual inspection and adjustment (stitching calibration, lens distance, horizontal correction). From the studio, the user exports a stitched “raw” DNG in 360° equirectangular with no color correction. Call it a “pseudo-raw” DNG if you like, as though it came from a virtual single 360° camera sensor that projects spherical data equirectangularly. [SEE PHOTO 2]

As far as any photo post-editing application is concerned, this pseudo-raw DNG is a raw file. Affinity Photo and Photoshop will only open it in Develop mode. In Develop persona, the equirectangular projection is displayed flat, with the inherent visual distortion toward the poles and the clipped edges that reflect how the image is stored, not how it is viewed (which would be seamless and distortion-corrected in a live scrolling viewer window or VR headset).

In developing this photo, I have started playing around with color, tone and detail, but in doing so I really want to pay attention to the couple and the table they’re by, as well as the tree tops overhead. However, note the red circles: the couple/table happens to be cut off by the edge of the flat image, and the tree tops show the effects of polar distortion. I cannot see both sides of the table seamlessly, and I have to pan to either extreme edge of the image to view one part of it or the other. [SEE PHOTO 3]

After developing, however, I can view the photo with live projection. The red line approximates where the sides of the file actually occur. In live projection, the table is now seamless, the treetops fairly undistorted. Granted, this lame photo isn’t a perfect example, but hopefully it hints at why it is so valuable to preview an image with live projection seamlessly during development. [SEE PHOTOS 4, 5, 6]

@kirkt, all of what you propose with Smart Objects sounds cool and quite transformative. But for the sake of my simple workflow, all I’m looking for is really basic: just the ability to preview and scroll an equirectangular image seamlessly and without distortion in Develop the way I can with Live Equirectangular Projection in Photo persona. The live projection ability already exists in AP; it just isn’t enabled as a preview in the Develop persona.
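For anyone wondering why the flat view cuts the table in half and smears the tree tops, here is a small sketch of the standard equirectangular mapping from pixel position to longitude and latitude. The function names and the 4000×2000 size are only illustrative, and this says nothing about how Affinity Photo implements its live projection.

```python
import math


def pixel_to_lonlat(x, y, width, height):
    """Map a pixel centre to (longitude, latitude) in degrees.

    Longitude runs from -180 (left edge) to +180 (right edge);
    latitude runs from +90 (top) to -90 (bottom).
    """
    lon = (x + 0.5) / width * 360.0 - 180.0
    lat = 90.0 - (y + 0.5) / height * 180.0
    return lon, lat


def lon_gap(lon_a, lon_b):
    """Shortest difference in longitude, going around the sphere."""
    d = abs(lon_a - lon_b) % 360.0
    return min(d, 360.0 - d)


def horizontal_stretch(lat_deg):
    """How much wider a feature is drawn at this latitude than at the equator."""
    return 1.0 / math.cos(math.radians(lat_deg))


width, height = 4000, 2000  # illustrative 2:1 equirectangular size

left_lon, _ = pixel_to_lonlat(0, 1000, width, height)
right_lon, _ = pixel_to_lonlat(width - 1, 1000, width, height)

# The first and last columns are one pixel step apart on the sphere,
# even though they sit at opposite sides of the flat file.
seam_gap = lon_gap(left_lon, right_lon)
pixel_step = 360.0 / width

print("gap across the file edges (degrees):", seam_gap)
print("gap between neighbouring columns (degrees):", pixel_step)
print("horizontal stretch at 60 degrees latitude:", horizontal_stretch(60.0))
```

So anything that straddles longitude ±180° is split across the two sides of the file, and content near the top and bottom is drawn progressively wider, which is exactly what a live projection preview would undo.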

-

Hi Kirk, Not checking for stitching errors and blending: as you say, that is already done in the stitching software before exporting the raw DNG. I’m talking about viewing the already-stitched DNG as a live equirectangular projection in Develop mode so that I can see undistorted detail seamlessly and make better color/curve/detail decisions, even along the stitch lines. The view is supported in Photo persona; it would be extremely helpful to view it the same way while developing.

-

LUT Organizer/gallery

dkallan replied to Cristophorus's topic in Feedback for Affinity Photo V1 on Desktop

+1 for sure!

-

I have started doing some 360° work, and my camera/software exports equirectangular 360° photos in raw DNG format. The first step of any raw image is to grade and develop in the Develop Persona, but unfortunately I have found no option to view the raw image with a live equirectangular projection whilst in that persona. When developing, the ability to toggle between normal and live equirectangular projection is critical, especially when scrolling/zooming/examining close-up details and stitched areas seamlessly, without borders or distortion. The Layer > Live Projection > Equirectangular Projection works great in the Photo Persona. There seems to be no reason that live equirectangular projection shouldn't be supported in Develop, for all standard, Split and Mirror views (and presumably, activating this view in Develop would automatically apply live equirectangular projection for the base layer after developing). Am I missing some simple view setting, or is this a current limitation of the Photo app? If the latter, is it on the roadmap?

-

When using the Navigator view while zoomed in on an image, I find myself constantly reaching for the zoom boundary box to drag it around the Navigator--seems like a natural and intuitive thing to do--except that the box doesn't drag. I have to fall back to a two-fingered drag on the canvas itself, which sometimes affects my zoom level. I know it's not entirely precise, but being able to drag the zoom box in Navigator would be a great addition to the experience.

-

Any chance of getting an "Original Image" entry at the top of the history list? The slider is a bit finicky, and it would be nice to have a clickable initial state at the top of the list.