Posts posted by fde101

-

Thanks, and yes, I have also pointed out the possibility of using Keyboard Maestro (KM) for various things people were asking for, but it is not really ideal in a lot of those cases given the current relative lack of keyboard shortcut / scripting options in the Affinity products.

Some things it can do very well, others it can kind of act as a kludge. The addition of scripting support should significantly improve the possibilities.

Perhaps a moderator could move this thread to the Tutorials section - but at least this helps to explain what the thread was meant for.

-

I'm a bit confused by this post... are you posting a tutorial/example for other users (in which case it belongs in the tutorials forum - https://forum.affinity.serif.com/index.php?/forum/9-tutorials-serif-and-customer-created-tutorials/) or are you asking for Serif to somehow connect with the developers at Keyboard Maestro to provide enhanced integration with their product?

There is already a thread discussing scripting/plugin support pinned at the top of the feature request forums; that is coming eventually. Any tie-ins with Keyboard Maestro or other platform-specific utilities would most likely need to be derived from that more generic scripting support. I would not expect Serif to provide direct specific support for KM or other similar utilities, but the plugin support may provide a mechanism for the KM developers to provide a better tie-in with the Affinity products, at which point you could request that of them.

You may not even need that plugin to make more effective integration possible, however, as KM can already execute arbitrary AppleScript code. Serif suggested at one point that, while they seem to have decided to use the relatively pathetic ECMAScript language as the basis of their scripting support, they will at least provide one AppleScript command to execute provided ECMAScript code against their scripting engine. That might provide the hook needed to perform tasks more directly using the AppleScript support in KM.

-

35 minutes ago, Alfred said:

if you're using polar coordinates the angle decreases when going clockwise.

I would imagine that more people know how to read an analog clock than polar coordinates.

From a mathematical standpoint the Cartesian coordinate system also rises up from the baseline, but we don't normally work with software having the ruler measure from the bottom of the page by default.
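To make the two conventions concrete, here is a small Python sketch that converts between a mathematical polar angle (measured counter-clockwise from the positive x-axis) and a clock-style angle (measured clockwise from 12 o'clock). The function names are made up for illustration and do not come from any Affinity API.

```python
def polar_to_clock(theta_deg: float) -> float:
    """Convert a polar angle (counter-clockwise from 3 o'clock)
    to a clock angle (clockwise from 12 o'clock), in degrees."""
    return (90.0 - theta_deg) % 360.0


def clock_to_polar(clock_deg: float) -> float:
    """Inverse conversion; the formula happens to be its own inverse."""
    return (90.0 - clock_deg) % 360.0


if __name__ == "__main__":
    # Polar 0 degrees points at 3 o'clock, a quarter turn clockwise from 12.
    print(polar_to_clock(0))    # 90.0
    # Polar 180 degrees points at 9 o'clock, three quarters of a turn clockwise.
    print(polar_to_clock(180))  # 270.0
```

As the polar angle grows, the clock angle shrinks, which is the "angle decreases when going clockwise" behavior mentioned in the quote, seen from the other side.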

-

Not sure that there is really a case for this as the rotation of the wheel is somewhat arbitrary and varies wildly among different products.

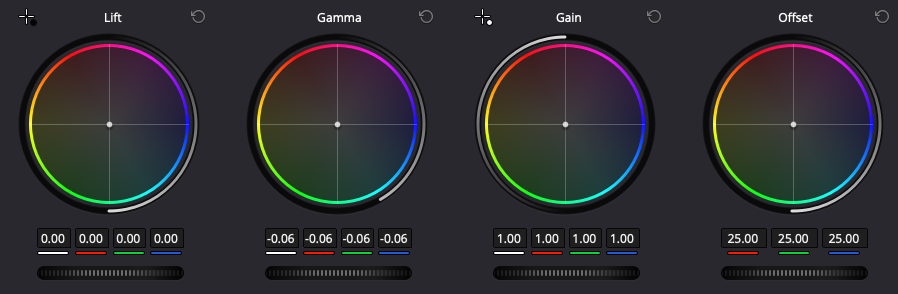

Here is what the wheels look like in DaVinci Resolve, which is one of the more typical tools used for color grading video:

Here is the wheel in the Apple color picker, with yellow a bit to the right of the top - note that the colors go in the same direction as Resolve and the opposite direction from the Affinity products (the Affinity products go purple-red-orange-yellow clockwise, these go counter-clockwise):

Capture One is organized like the Apple color picker:

So is GIMP:

Corel Painter - note that this one places yellow in roughly the same place as do the Apple Color Picker, GIMP, and various others, but the colors run in the opposite direction, as they do in the Affinity products:

With the wide variety of orientations that are used, no matter what the Affinity products decide on, they are going to be different for *someone* who goes back and forth between them and some other relevant product, so there is not really any point in trying to sync it to anything in particular.
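As a rough illustration of why there is no single "correct" orientation, a wheel layout can be described by just two parameters: which hue sits at the top, and which way the hues run. The sketch below is hypothetical (the function and parameter names are mine, and the numbers are not measured from any of the products mentioned); it uses standard HSV hue angles, with red at 0 and yellow at 60.

```python
def wheel_angle(hue_deg: float, hue_at_top: float, clockwise: bool) -> float:
    """Return where a hue is drawn on the wheel, as degrees clockwise
    from the top, for a wheel with `hue_at_top` at 12 o'clock and hues
    increasing in the given direction."""
    sign = 1.0 if clockwise else -1.0
    return ((hue_deg - hue_at_top) * sign) % 360.0


if __name__ == "__main__":
    RED, YELLOW = 0.0, 60.0
    # Two hypothetical wheels, both with yellow at the top,
    # differing only in the direction the hues run.
    print(wheel_angle(RED, YELLOW, clockwise=True))   # 300.0
    print(wheel_angle(RED, YELLOW, clockwise=False))  # 60.0
```

Two wheels that agree on where yellow goes can still be mirror images of each other, so matching one product on one parameter does not make the wheels match overall.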

-

Without having dug in too deeply, my guess would be that either softness or light bleed around the edge of the product may be tricking the algorithm that detects where the focus changes and causing the software to perceive that part of the image as being background, thus blurring it.

It may be possible to improve the algorithms to better detect those edges, but it is unlikely that they will ever be 100% accurate for all cases, so some manual refinement to the edges will likely always be needed in some cases.

-

On 1/27/2024 at 6:58 PM, tzvi20 said:

We are now almost in February 2024 still no blend tool...☹️

Still no giraffe tool.

-

5 minutes ago, NotMyFault said:

or extend Mac display to iPad and use it as trackpad

This would be the macOS version running under macOS with the iPad as an external display; it would not be the iPad version of the app.

-

Agreed, along with variable fonts, and this has been brought up many times in the past.

For something like this particular example you can use a normal font and make the text a mask for a picture (in this case of a flag) behind the text, but it would obviously be more convenient to use a pre-rigged font like that, and other examples of color fonts may not be quite so easy to mimic in that manner.

-

Serif has stated in the past that they were not happy with the results they were getting from tracing using the algorithms they had on hand and that when they introduce this into the Affinity suite they want to do it right and have something better than what they could achieve right now. There were also hints that they intend to do this in a big way and implement it as an entirely new persona just for this task.

This was some time ago, however, so it is not clear what direction this may currently be taking. Still, I would expect this will come in time, but they are waiting until they can do it in a way that they will be happy with, which I can certainly get behind.

-

We need a "You're welcome" reaction. Responding to "Thanks" with a "Like" reaction seems to indicate "I like being thanked..." which is kind of disingenuous... on the other hand a "Thanks" reaction to a "Thanks" post is more like "thanks for the thanks" which doesn't seem quite right either. 🤔

5 minutes ago, Alfred said:

Thanks for pointing that out.

No problem!

-

3 hours ago, Alfred said:

click on a Group

For a Group, you need to select something within the group, not the group itself, for this to work. Alternatively, with the group itself selected, enable "Insert inside the selection" from the toolbar. It does work as @Alfred describes it for Layer and Artboard layers.

-

5 hours ago, Dan C said:

'Lock Children' has been used in the title to match the name of the option

Fair enough, so why not "Overriding Lock Children"?

There are better ways to accomplish this than the grammatical assault.

-

Real flowers are not truly symmetric.

-

On 11/10/2022 at 9:21 AM, rparmar said:

This is one of the most essential features, along with global layers and foot/end note support. I guess we got one out of three?

More essential than text boxes, rectangles and lines?

-

49 minutes ago, MEB said:

so their position on the canvas is relevant - thus the requirement for integer position coordinates when using the Export Persona. Currently only the File > Export interprets them as "independent canvas" and does not require integer position values (only integer width and height).

So... if you have an artboard with a position which is not pixel aligned to the canvas, but it contains objects which *are* pixel aligned to the canvas, then those objects are not pixel-aligned to the artboard.

This would result in modified dimensions when exporting using the Export persona (but not the potentially unwanted aliasing), but aliasing when exporting via File > Export (due to the objects having non-aligned positions with respect to the artboard), though the dimensions would be correct.

While logically this makes perfect sense, it is ultimately a lose-lose situation for what I would assume to be the majority of users.

27 minutes ago, Patrick Connor said:

I ALSO feel the artboard tool should always default to creating pixel aligned and whole pixel widths and overriding would require a conscious choice.

Agreed. Also with other mechanisms that may impact artboard size/position.

This can be tricky to get right across the board, however. If the resolution (DPI) of the document changes, artboards which were originally pixel aligned may no longer be aligned. For example, if you change from 200 DPI to 300 DPI, then an artboard which was at (1,1) would now be at (1.5,1.5). Adjusting the position of the artboard to compensate may throw objects on the artboard out of alignment when they otherwise would have been aligned... and the complexities continue.
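The arithmetic behind that example can be sketched in a few lines of Python. This assumes that a position keeps its physical location on the page when the DPI changes, so its pixel value scales by new DPI over old DPI; the helper names are invented for illustration and say nothing about how the Affinity products actually store positions.

```python
from fractions import Fraction


def rescale(px, old_dpi: int, new_dpi: int) -> Fraction:
    """Pixel coordinate after a DPI change, keeping the same physical position."""
    return Fraction(px) * new_dpi / old_dpi


def is_pixel_aligned(px) -> bool:
    """True if the coordinate falls exactly on a whole pixel."""
    return Fraction(px).denominator == 1


if __name__ == "__main__":
    # An artboard at x = 1 px in a 200 DPI document, converted to 300 DPI.
    artboard_x = rescale(1, 200, 300)
    print(float(artboard_x), is_pixel_aligned(artboard_x))  # 1.5 False

    # An object that is pixel aligned to the canvas at x = 4 px is then
    # at a fractional offset relative to that artboard.
    object_x = Fraction(4)
    print(float(object_x - artboard_x))  # 2.5
```

Snapping the artboard to x = 2 would fix the artboard but move the relative offset of every canvas-aligned object on it, which is the knock-on effect described above.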

-

Making the header areas a bit darker and making the active tab names and collapsible area titles bold would probably be small changes that could go a long way toward improving the navigability of the panels. I find the current scheme sufficient for myself but I can easily see that others may have more trouble with the current scheme.

I still contend that adding color to these areas would be a mistake.

-

10 hours ago, Viktor CR said:

Something like this would be better:

Not really, because it introduces an entire area of color to the interface where it is not needed. More contrast may be good, but the overall cast of the bulk of a professional UI for an application which deals with color should be kept as neutral as reasonably possible, to avoid throwing off the user's perception of the color in the elements within the document.

With some things, such as color wells, there is no choice. Small amounts of low-saturation color in a few places such as icons may be OK for many users, but it is good to have an option to disable that and make them gray, which the Affinity products do offer in Preferences.

-

On 2/2/2024 at 9:31 PM, Bit Dissapointed said:

I can say that in the heat of the moment, I often end up applying the color to the wrong attribute (stroke or fill) because I'm not paying full attention to which of the two is on top, and it's equally frustrating every time.

Maybe an indicator could be added to the mouse pointer when over the wheels showing a filled rectangle or an outline to indicate whether the fill or stroke is being set?

-

13 minutes ago, GarryP said:

a questionable idea to have such an apparently ‘destructive’ functionality available, by default, via such an easily-pressed key

Thing is, being on such an easily pressed key is what makes the feature so useful. You can quickly toggle the visibility of much of the UI in order to see more context around what you are working on, then quickly bring it back. It is probably more valuable on smaller screens than larger ones, but regardless, it is a nice touch once you understand it.

3 hours ago, DelN said:

Wow! Yes, thanks, Walt. It was that - and they're all back now from the darkness. Strange, because I've never hidden them. I didn't know you could...

When you first open any of the Affinity applications, the welcome box offers a link to a set of video tutorials. The very first of the "Basics" tutorials is an overview of the user interface in which various ways of accessing and arranging panels, as well as the use of the tab key, are all explained.

-

22 minutes ago, Chills said:

That FlatPak is even needed highlights the problem.

Thing is, when you look at the server side of things, the Linux market share has quickly grown to be much larger than any of the traditional UNIX systems, mainframe environments, or other traditional server platforms.

Most of the IBM mainframe operating systems originally shipped with source code (in assembly language no less) and each site would customize their installation to their needs by modifying the code and rebuilding the system based on those modifications - things like assigning ranges of device numbers to different types of storage devices or terminals would be handled by updating an assembly language file and rebuilding the nucleus (kernel) of the system.

Software that worked in one environment may not have worked in another, even if they were running the same version of the same operating system, as they could be configured very differently from each other.

It was not uncommon for newer versions of a UNIX system or of many other even commercial operating systems to break compatibility with older software and require a recompile at least. In fact one of the main selling points of the Solaris operating system (Sun's UNIX platform now owned by Oracle) was the "application binary compatibility guarantee" - that software compiled on an older version and correctly using documented APIs would continue to work on a newer version of the operating system. This was one of the ways they distinguished themselves in the market.

The issues you are citing as being "Linux" problems have been commonplace and normal throughout computer history, including in the commercial operating systems that Linux was originally created to mimic.

When Windows NT was originally released, it could run OS/2 software and had a POSIX environment (different from WSL), both of which are gone now. The original Windows versions could only run 16-bit applications, but the 64-bit versions of Windows have dropped support for 16-bit Windows applications completely. Solaris actually has better compatibility with older software than Windows does, yet it has been marginalized on the market when compared to Linux.

Yes, the software compatibility issues are a disadvantage that Linux has compared to *some* commercial platforms such as macOS, Windows and Solaris, but macOS and Windows are hardly immune to that either, and I'm not sure that this is really a major reason why its desktop market share is still relatively small.

A lot of people go out to the store to buy a computer, and what comes on it? Many of them just use what is there, not necessarily knowing any better, and never bother to upgrade to Linux or some other superior platform. Windows is what gets shipped on the computers, thus it is what people use when they simply don't know any better, and thus what they learn to use. If you want to fix the market share, get more Linux-based computers out into the stores where people can see and buy them. Now you have a range of new problems to contend with...

-

16 minutes ago, RichiePhoto said:

Wow, the Mac market is smaller than Linux, yet they have a Mac version available

That is, again, Steam users, not computer users. Steam has their own Steam Deck which runs Linux and would be counted as part of the Linux percentage, and the Linux version of Steam has the benefit of Proton, which can run many otherwise Windows-only games, a feature they do not make available on macOS, so those numbers are likely skewed.

Another thing to consider is that some game engines only make their development tools available for Windows even though they produce games that run across multiple platforms; this same issue exists with a few other development environments. Since those who are involved in development of games also tend to need to do artwork, this can further skew the availability of the produced games: during development they will be doing most of their testing on the platform they are actually developing on, making that the most likely platform to be well-tested and released for...

It is a vicious cycle which makes it hard for anyone new to enter the market regardless of technical merit, and the fact that many companies are often more concerned with profit than with actual technical superiority or quality of life of their users does not help matters either.

This is one of the benefits of the Linux philosophy: open source projects often eliminate profit from the equation, allowing for the development of a product that can focus on technical merit rather than on how big the user base is. Of course this is a double-sided coin, as developers need to earn a living, and if there is no profit in it, they can't devote the time to the project that they would need to in order to flesh it out the way they may want it to go. As a result, very few of the projects progress as well as many would like, users on the outside perceive open source as somehow inferior, and we wind up with long and ultimately pointless forum threads like this one.

-

Just to point it out, 1-2% of the Steam users using Linux does not equate to 1-2% of the desktop market using Linux.

Not everyone plays games on their computers, and not everyone who does play games uses Steam.

I happen to have Steam on all three platforms: I have a Steam Deck (Linux); multiple copies of Steam installed on Windows (under CrossOver on my Mac, which is granted "fake" Windows but would still be counted as Windows in the statistics; in a virtual machine which crashes when I try to run 3D graphics; and on an older Windows laptop that lacks the performance for some of the games I play); and Steam under macOS.

Given the choice, would I rather play a native macOS game (maximum performance on my most powerful computer), a Linux game (native on my Steam Deck), or a Windows game (in which I may need to try it in several places to finally figure out where it sort of works)?

If you go purely by the numbers, I am among those contributing to the statistics being extremely misleading. Sure, I have it installed in places that would be counted as Windows in the statistics, but I would 100% prefer a native macOS game, or failing that one under Linux, over one for Windows. I would even look for it on my Nintendo Switch or some other console before getting a Windows version if the game is available for the Switch.

Consider also that I very rarely use Windows on one of my personal computers for anything other than playing a game (the rest of the time being to update security patches or run some program that is Windows-only, something that is already a rarity for me and is becoming even more so over time), which means that as more of the games I am interested in become available on other platforms I have access to, there will be less and less reason for me to bother with running Steam on Windows. I suspect there are at least some others in this same position, and the Windows "dominance" in the gaming market would be reduced by some non-trivial amount if more games became available natively on other platforms.

As much as I suspect this is true for gaming, I suspect it is even more true for many of the creative markets. This is mostly a problem of momentum, but that momentum can be hard to swing.

-

5 minutes ago, albertkinng said:

The key takeaway here is that as a developer, you're not strictly limited to the default behaviors provided by the development environment.

True, and when developers leverage this ability to create arbitrary changes to apps which break with conventions that are standard to the environment, it almost always results in sub-standard applications which do not fit in with the rest of the environment.

A macOS user who is accustomed to finding that option in that location in that menu will look for it there in other applications as well. Being the one application that makes them go hunting for it when it can be readily found in the same expected place everywhere else is not an act of kindness to your users.

-

Bad idea, at least on the Mac, because this is a standard macOS convention used by many other apps, both from Apple and from third parties.

If I create a new document-based SwiftUI application for macOS using Xcode, there is zero code or configuration in the application itself to even have an "Open Recent" menu at all, much less one with a "Clear Menu" command, yet the framework creates it automatically; that is how inherent it is to the nature of a macOS application.

-

Yellow up color wheel like other apps (in Feedback for the Affinity V2 Suite of Products)

That is not quite what was being asked for, but the request was a redundant insertion of an irrelevant topic that is already being covered on another thread, so it is likely best ignored here to avoid further polluting this thread.