CM0

Members

Posts: 309

Everything posted by CM0

-

AI generative Fill in Affinity

CM0 replied to WMax70's topic in Affinity on Desktop Questions (macOS and Windows)

I generally agree. Affinity can't work on everything, and if I need AI generative fill I can find it for free, just as I use Inkscape for autotrace. I'm assuming that since Affinity is already working on extensions/scripting support, AI will indirectly become available through that effort. I just wish Affinity could focus on the defect backlog. I would be so much more productive if my reported defects were ever fixed. Judging by the number of fixes I see, they seem to be spending some quality time on Publisher, which I'm happy about, but a lot of old bugs everywhere else could really use some attention.

461 replies

Tagged with: artificial intelligence, ai (and 3 more)

-

AI generative Fill in Affinity

CM0 replied to WMax70's topic in Affinity on Desktop Questions (macOS and Windows)

Yes, their major advantage is already lost. There are already free versions, so there is no need to switch and pay for it. Microsoft just added it even to MS Paint in Windows 11. If you want selections combined with generative fill, Krita has it for free, and the reason Krita has it is that it has good plugin/extension support. That continues to be my argument for Affinity. They are already working on it, and in this crazy, rapidly changing landscape the best path forward is simply to allow integration with whatever comes next.


-

Move data entry improvements

CM0 replied to Ash's topic in [ARCHIVE] 2.4, 2.3, 2.2 & 2.1 Features and Improvements

It could be really useful and powerful to allow expressions in the input fields, with a variable 'n' for the instance number of the current copy. You could then create some interesting patterns.
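A minimal sketch of how such per-copy expressions might be evaluated, in Python. Everything here is hypothetical and not an Affinity API: the field names (`rotation`, `x`, `y`), the allowed operators and functions, and the assumption that `n` starts at 1 for the first copy. Expressions are parsed with Python's `ast` module and only whitelisted operations are evaluated, so a field cannot run arbitrary code.

```python
import ast
import math
import operator

# Hypothetical whitelist of what a field expression may contain.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.Mod: operator.mod,
}
_UNARY_OPS = {ast.USub: operator.neg, ast.UAdd: operator.pos}
_FUNCS = {"sin": math.sin, "cos": math.cos, "sqrt": math.sqrt}


def eval_field(expr: str, n: int) -> float:
    """Evaluate a field expression such as '15 * n' for copy number n."""

    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.Name) and node.id == "n":
            return n
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](walk(node.operand))
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in _FUNCS
            and len(node.args) == 1
        ):
            return _FUNCS[node.func.id](walk(node.args[0]))
        raise ValueError(f"unsupported expression: {expr!r}")

    return walk(ast.parse(expr, mode="eval"))


def duplicate(count: int, fields: dict) -> list:
    """Return the evaluated field values for copies n = 1..count."""
    return [
        {name: eval_field(expr, n) for name, expr in fields.items()}
        for n in range(1, count + 1)
    ]


# Rotation steps 15 degrees per copy, x grows quadratically, y oscillates.
for copy in duplicate(4, {"rotation": "15 * n", "x": "n ** 2", "y": "10 * sin(n)"}):
    print(copy)
```

A linear expression such as `15 * n` reproduces a fixed step per copy, while non-linear expressions in `n` give patterns that a fixed step cannot.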

AI generative Fill in Affinity

CM0 replied to WMax70's topic in Affinity on Desktop Questions (macOS and Windows)

That is more of an argument for not building such a plugin into Affinity itself. The video even argues that the plugin is better than Adobe's own generative fill. Instead, we just need a scripting/plugin API for Affinity. That is how Alpaca was made available for Photoshop, Stable Diffusion for Krita, etc. No company can keep up with the pace of AI development; whatever they include will be obsolete almost by the time they ship it.


-

@NathanC Could we then submit this as a feature request? It is still a dimensional change and would likely be relatively simple to support, as the mechanism already exists. It would allow some automatic effects, such as special text highlights, or automatically erasing items underneath the text by syncing an erase-mode object beneath it, which is the effect I was hoping to achieve.

-

It is possible to link a group containing text, or a plain text object, to another object, and transforms work correctly when resizing with the object handles. However, if the object's size changes because the text changes, the linked object does not sync.

Attached sample: modify either object with its handles and both resize in sync. Change the text and the text object changes size, but the linked object does not.

Publisher 2.2.1 / Windows 10

Attachment: Linking broken with text changes.afpub

-

Nice addition! BTW, it would also be useful to be able to reverse the stroke path when using brushes.

-

We are live, and thank you!!!

CM0 replied to Ash's topic in [ARCHIVE] 2.4, 2.3, 2.2 & 2.1 Features and Improvements

Not sure if anyone is doing that, but yes, that would not be appropriate. I have led large development teams myself and worked on very large projects, and it is really impossible to know from the outside what internally inhibits greater efficiency and product reliability. Such matters are usually the result of leadership: depending on the size of the company, often upper management and executives, who are ultimately responsible for operations. You can be almost certain the developers and test teams want the issues fixed as well, but they probably don't get to set the ultimate priorities.

-

We are live, and thank you!!!

CM0 replied to Ash's topic in [ARCHIVE] 2.4, 2.3, 2.2 & 2.1 Features and Improvements

I think they do care, but marketing works on those who don't know about the bugs. The irony is that the bugs tend to hurt the most dedicated users, the ones doing the most advanced work. For example, not many here use artboards. I use artboards exclusively, while most users never encounter the unbelievable number of bugs that are unique to them. Almost all of the live filters are broken on artboards. I've been meaning to write something up about it, but I haven't had the time, and my motivation is low because none of the bugs I've ever opened has been fixed yet.

-

AI generative Fill in Affinity

CM0 replied to WMax70's topic in Affinity on Desktop Questions (macOS and Windows)

True, but Affinity at least has an opportunity here. Because they don't already have an API, they have complete flexibility in its design: they can observe what is happening in the world of AI and its use cases, and ensure that such integration will be possible, highly efficient, and rich in capability.


-

AI generative Fill in Affinity

CM0 replied to WMax70's topic in Affinity on Desktop Questions (macOS and Windows)

lol, yes, the buzzword economy. Nothing delivered what it promised; usually it delivered the exact opposite, complexity that only hurt the business. I remember turning simple FTP batch scripts that cost nothing to run into monstrous BPEL SOAP services just because executives wanted to promote the adoption of advanced tools and business processes. A script that took someone a few hours to write turned into a half-year project for an entire team. Madness.


-

AI generative Fill in Affinity

CM0 replied to WMax70's topic in Affinity on Desktop Questions (macOS and Windows)

Adobe's edge is quickly evaporating as well. Meta is now embedding AI generation directly into its social media apps, and many standalone AI generators are adding their own generative fill. The productivity benefit for users cannot be ignored, but a sustainable business model is going to be very challenging because any advantage is quickly lost. Such is the nature of rapid AI development. So, in my opinion, it would not necessarily benefit Affinity to spend its limited resources on a futile effort to keep up with an implementation of its own. They would also have to deal with as-yet-unresolved legal issues. A scripting API, however, would provide the necessary integration while avoiding these limitations. Third parties and open-source communities are going to be far faster at innovating and keeping up with AI.


-

We are live, and thank you!!!

CM0 replied to Ash's topic in [ARCHIVE] 2.4, 2.3, 2.2 & 2.1 Features and Improvements

I think this view significantly diminishes the value and importance of bug fixes. A bug fix is in effect like a new feature, as it often means you can now do something you could not do before. You can market and promote fixes as improvements just as well, and you should. Judging by the recent activity of the "Serif Info Bot", there seem to have been a lot of bug fixes. This is great! I hope there are many more. I dream of the day I finally see the "Serif Info Bot" comment on one of my submissions :-)

-

Thank you. Let me just clarify what I hope could happen in this case. Affinity does not consider this issue a bug; however, users do not see it as a "nice to have" either, because it breaks the user experience. The behavior is misleading in a way that some would describe as displaying wrong or incorrect information, and any user who experiences it will immediately think "bug" or that something is broken. Simply placing it alongside all the other new feature requests seems out of place. So yes, if feature requests aren't tracked, developers simply browse them as they have time, which I'm certain is extremely limited, and this request has likely long been forgotten along with its importance to users. I am fine with letting the developers come up with a solution: an overhaul, a new view mode, or something else.

Suggestion: Affinity could consider a new tag for feature requests that fall under "breaking user experience" or "unexpected behavior". I think this might be the optimal middle ground, as it allows developers to find and consider such issues and prioritize them separately from the "nice to have" new features. That way, as a user, I would at least feel that the information I provided has been correctly understood in its implications, whether or not it is ever implemented :-)

-

Also, as previously explained, this is not a workaround. There is no situation in which the UI lying to the user is desirable or would not be seen as a bug by users. I understand this is by design, but it has a severe negative impact on our work, and dismissing its importance because it is by design is extraordinarily frustrating to users. You previously expressed interest in solving this issue, and I appreciated that. How exactly does a request ever get picked up by the developers? That request has shown that this affects many users. Has it been logged in the development tracking system? We are often told development will take a look at certain issues; is development even aware of this discussion?

-

@Chris B Could we please get a proper issue logged with development? As @NotMyFault has pointed out, this issue has been reported by many people but has likely been miscategorised in confusing ways. Please take note of this comment on a previous post: The thread in the above issue shows that this affects many people and likely contributes to many miscategorised issues/bugs. The previous issue was tagged as feedback for v1, so it is probably not getting the focus and priority it needs. If we could get focus on this better-described problem, we could close the others or consolidate the relevant notes into one issue to log with the developers.

-

Thank you for the reference. I suggested exactly what you stated earlier in this thread: we need at least a preview mode for accurate rendering. Unfortunately, there are a lot of such issues that don't get the priority they deserve because they are so poorly understood. Many users have likely encountered such problems but don't know how to describe them, so they go unreported, or the reports get diluted under seemingly unrelated topics, which is how this thread started.

-

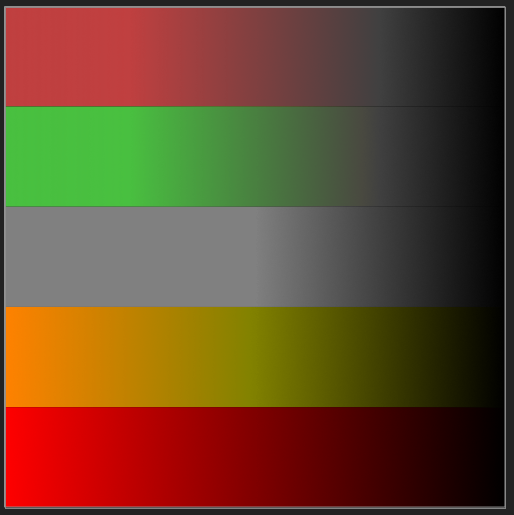

In my continuing effort to break down and document some of Affinity's odd behavior, I'm now focusing on the peculiar blending behavior of Passthrough mode. Previous explanations have been something like "it is due to blending order". However, if that were the case, we should be able to replicate exactly the same behavior manually. I have been completely unable to do so; I can neither replicate nor explain it. Furthermore, the behavior would not seem to be expected or desirable.

This is a sample using Passthrough:

And this is the same using Normal:

There are specifically two problems (bugs) with the Passthrough image:

1. Colors are deformed. There seems to be desaturation or some additional oddity. Pure red blends normally, but anything with mixed colors or partial grays undergoes an undesirable color transformation.
2. There is banding in the image. The underlying smooth gradient seems to be clipped somehow and is no longer a smooth ramp. This is most evident in the middle gray layer: if you sample the colors, you will see the gray values do not change at all until you cross the midpoint of the image.

These conditions only occur when there is a mask or erase-mode layer like the following:

The mask must also have partial transparency; it is the area of partial transparency that produces the unexpected results. And it must be done with a mask: if we place an image with partial transparency instead, it blends fine.

I had assumed this is likely due to the mask being applied to the underlying layer (the fill layer in the example above) during blending/rendering, and the result then being blended a second time with the fill as part of the group. However, you can't reproduce this effect by doing these steps manually. No combination of any blend mode available in Affinity produces what we observe with Passthrough, whether blending with the black fill or an empty layer, or using multiple merges.

Additionally, if you add the mask as a child layer of a child, the problem still occurs, and in that configuration the mask should not be affecting the group's underlying layer. So what is happening? I've attached the sample document for anyone who would like to attempt a further explanation.

What to look for, and when to use workarounds:

Any time you are using Passthrough combined with some form of applied transparency (mask, erase mode), you likely will not get the expected result. Consider:

- Don't use Passthrough. Break up your composition in some other way; this might be difficult depending on your composition.
- Create copies of your mask and apply one individually to every child layer of the group. The mask must be attached in the masking position. If it is added as a child layer of a child layer, the problem resurfaces, which is another odd behavior.

Attachment: passthrough mode blending.afpub
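To compare the "mask applied twice" hypothesis with values sampled from the document, it can help to model it numerically. This is a minimal Python sketch under loudly stated assumptions, not Affinity's actual rendering pipeline: straight-alpha source-over compositing on a single channel in [0, 1], a mask whose opacity ramps linearly across the image, and a mid-gray layer over a black fill. `single_mask`, `double_mask` and `longest_flat_run` are hypothetical helpers, and `double_mask` is only one interpretation of "blending a second time".

```python
from typing import List

# Assumptions (not Affinity's documented pipeline): straight-alpha
# source-over compositing, one colour channel in [0, 1], 8-bit output.


def over(src: float, dst: float, alpha: float) -> float:
    """Standard source-over: blend src onto dst with coverage alpha."""
    return alpha * src + (1.0 - alpha) * dst


def single_mask(src: float, dst: float, m: float) -> float:
    # Expected result: the masked layer is composited once.
    return over(src, dst, m)


def double_mask(src: float, dst: float, m: float) -> float:
    # Hypothesis: the masked result is composited onto the underlying layer,
    # then masked and composited onto it a second time (effective alpha m**2).
    return over(over(src, dst, m), dst, m)


def to_8bit(v: float) -> int:
    """Clamp to [0, 1] and quantise to 0..255, like a colour picker readout."""
    return round(max(0.0, min(1.0, v)) * 255)


def longest_flat_run(samples: List[int]) -> int:
    """Length of the longest run of identical neighbouring samples (banding)."""
    if not samples:
        return 0
    best = run = 1
    for prev, cur in zip(samples, samples[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


width = 256
ramp = [x / (width - 1) for x in range(width)]  # linear mask opacity
gray, black = 0.5, 0.0                           # mid-gray layer over a black fill

single = [to_8bit(single_mask(gray, black, m)) for m in ramp]
double = [to_8bit(double_mask(gray, black, m)) for m in ramp]

print("single-mask longest flat run:", longest_flat_run(single))
print("double-mask longest flat run:", longest_flat_run(double))
```

Under these assumptions, applying the mask twice changes the effective opacity from m to m², so the result stays dark longer at the transparent end of the ramp (a flat run of 16 samples here) but still changes well before the midpoint. A plain double application therefore does not by itself explain gray values that stay constant until the midpoint, which fits the observation that the effect can't be replicated manually.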

-

Being able to see in real time the effect of all the selection modification tools in Quick Mask mode. They are currently all either disabled or not updating while viewing in Quick Mask. Take 'Select Sampled Color' as the best example, because it is the hardest to use. You can toggle Quick Mask while the tolerance slider is visible, but the view will not update until you click 'Apply'. If it is not the result you want, you have to start over.